Making Compilers and Program Analysis Declarative

Modern compilation pipelines (compilers, optimizers, program analysis tools) consume, transform, and produce abstract syntax trees. If you squint a little, these processes start to look a lot like database queries, updates, and views. The ODIn Lab is looking at ways to leverage expertise from the database community to improve the performance of compiler tool chains, while at the same time making it easier to write compilers and program analyses by decoupling the core specification logic from logic aimed at making the compiler faster.

Draupnir💾📜

Multiple efforts in the PL community have adopted Datalog for compiler specification, due to its ability to easily define recursive properties. However, datalog also conflates identity and value, and as a result it struggles with aggregation. Draupnir is a compiler for a datalog-adjacent language that draws on group-/ring-theoretic provenance models (e.g., Semiring Provenance, Ring Databases) to capture aggregation.

DiDB

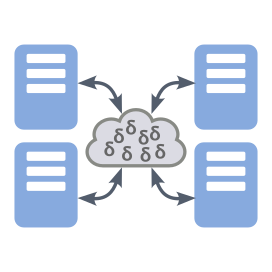

Incremental computation produces a unique data access pattern that is highly predictable. The DiDB (Distributed Incremental Data Base) project is exploring ways to leverage the predictability of these access patterns to minimize the overhead of concurrent updates.

Automating Re-usable and Reproducible Data Science

Data Science is frequently an iterative process, with scientists frequently revisiting and past efforts and adapting them for new research goals. A key challenge in this process is ensuring that efforts are re-used safely. Just like compilers for strongly typed languages help users understand the potential implications of changes in their code, the ODIn lab is looking to develop similar techniques to assist data scientists in developing safe, re-usable, and reproducible data science pipelines.

Vizier🕸️💾📜

Notebooks are a convenient tool for data exploration, allowing users to rapidly explore datasets, while preserving a record of the steps they took to get to their results. However, the notebook metaphor takes several design shortcuts in the name of efficiency that make it difficult to create safely reusable notebooks, and that create confusion for novice users. Vizier is the prototype of a new "workbook" metaphor that presents a notebook style interface to a "microkernel workflow system", which keeps cell results up-to-date, and doesn't have a kernel that needs to be re-started and re-populated.

Drag, Drop, Merge📜

Over the course of a multi-year (longitudinal) study, as social sciences researchers gain an improved understanding of the study's domain and subjects, it may be necessary to make incremental changes to the study's data collection procedures. Drag, Drop, Merge (DDM) is a set of tools for working with data collected under evolving methodologies, allowing study designers to flag differences between study iterations, and allowing researchers to quickly select the subset of the data that is appropriate for their analysis.

Safe, Efficient Management of Uncertain Data

Ambiguous, incomplete, conflicting, and otherwise uncertain data is difficult to use safely, often requiring days or even weeks of careful study to understand the uncertainty's implication on downstream analyses. Exploration, and re-use of uncertain data pose a particularly significant challenge, as it becomes easy to lose track of assumptions made during one phase of a data science project. The ODIn lab is exploring how uncertainty in data can be precisely modeled without impeding a researcher's ability to explore or analyze their data.

Mimir💾📜

Mimir adds support for uncertain data to Apache Spark by using lightweight annotations called "Caveats" that are propagated through queries. Caveated data and records can carry additional metadata (e.g., textual descriptions of uncertainty, or details about the scope of the uncertainty), but this information is not propagated during normal query evaluation. By only propagating the presence of a caveat in a query result, the performance impact on query evaluation is minimal, and the content of the resulting caveats can be subsequently derived through a form of program slicing applied to queries.

FastPDB💾📜

Although probabilistic databases (PDBs) are incredibly important for helping data scientists keep track of uncertainty and ambiguity in their data, existing PDBs can also be orders of magnitude slower than normal database engines. FastPDB is the first system to couple a PDB with an Approximate Query Processing engine, providing accurate approximations of probabilistic query results with competitive runtimes.

Uncertainty Annotated Databases📜

The UADB project, in collaboration with UIC and Nanjing Tech, is a lightweight model of incomplete data based on ranges. Instead of attempting to produce exact results, UADBs instead answer queries with bounds (both lower and upper) on the space of possible results. In addition to providing more valuable feedback for users, these bounds can be relaxed to improve performance.

Paused and Completed Projects

DBToaster🕸️📜

DBToaster is an SQL-to-native-code compiler. It generates lightweight, specialized, embeddable query engines for applications that require real-time, low-latency data processing and monitoring capabilities. The DBToaster compiler generates code that can be easily incorporated into any C++ or JVM-based (Java, Scala, ...) project. Since 2009, DBToaster has spearheaded the currently ongoing database compilers revolution. If you are looking for the fastest possible execution of continuous analytical queries, DBToaster is the answer. DBToaster code is 3-6 orders of magnitude faster than all other systems known to us.

Ettu📜

One of the greatest threats to a the security of a database system comes from within: Users who have been granted access to data using it in a malicious or illegitimate way. Our goal is to develop new types of statistical signatures for a user or role's behavior as they access a database. Using these signatures, we can identify non-standard behavior that could be evidence of malicious activity.

Jxplain

Ad-hoc data models like Json simplify schema evolution and enable multiplexing various data sources into a single stream. While useful when writing data, this flexibility makes Json harder to validate and query, forcing such tasks to rely on automated schema discovery techniques. Unfortunately, ambiguity in the schema design space forces existing schema discovery systems to make simplifying, data-independent assumptions about schema structure. When these assumptions are violated, most notably by APIs, the generated schemas are imprecise, creating numerous opportunities for false positives during validation. The Jxplain system adopts a set of heuristics that effectively resolve this ambiguity.

Pocket Data📜

Mobile phone CPUs can slow down to save power. An OS component called the 'governor' chooses when and how this happens. It turns out most governors on Android are over-engineered, making predictions based on useless data. The Pocketdata project explores how we can use live information from apps to make better choices for power management.

Leaky Joins📜

One of the primary challenges in graphical models is inference, or re-constructing a marginal probability from the graphical model's factorized representation. While tractable for some graphs, the cost of inference grows exponentially with the graphical model's complexity, necessitating approximation for more complex graphs. The leaky join algorithm couples together incremental view maintenance with approximate query processing to produce an anytime algorithm for inference in a very large-scale Bayes net.

Loki📜

The Label Once and Keep It project looks at how we can incrementally curate data lakes by tracking how people integrate data from multiple sources. Like popular work on Unionability, our goal is to identify related datasets, but we also support datasets that must be transformed (or 'mapped') in order to be unionable.

Pip🕸️📜

Pip was one of the first probabilistic database systems to cope with value-level uncertainty (as opposed to row-level uncertainty). In contrast to purely sampling-based approaches like MCDB, Pip was able to produce exact analytical results in a fraction of the time.